The Inevitable Conflict: Cloud AI vs. Data Sovereignty

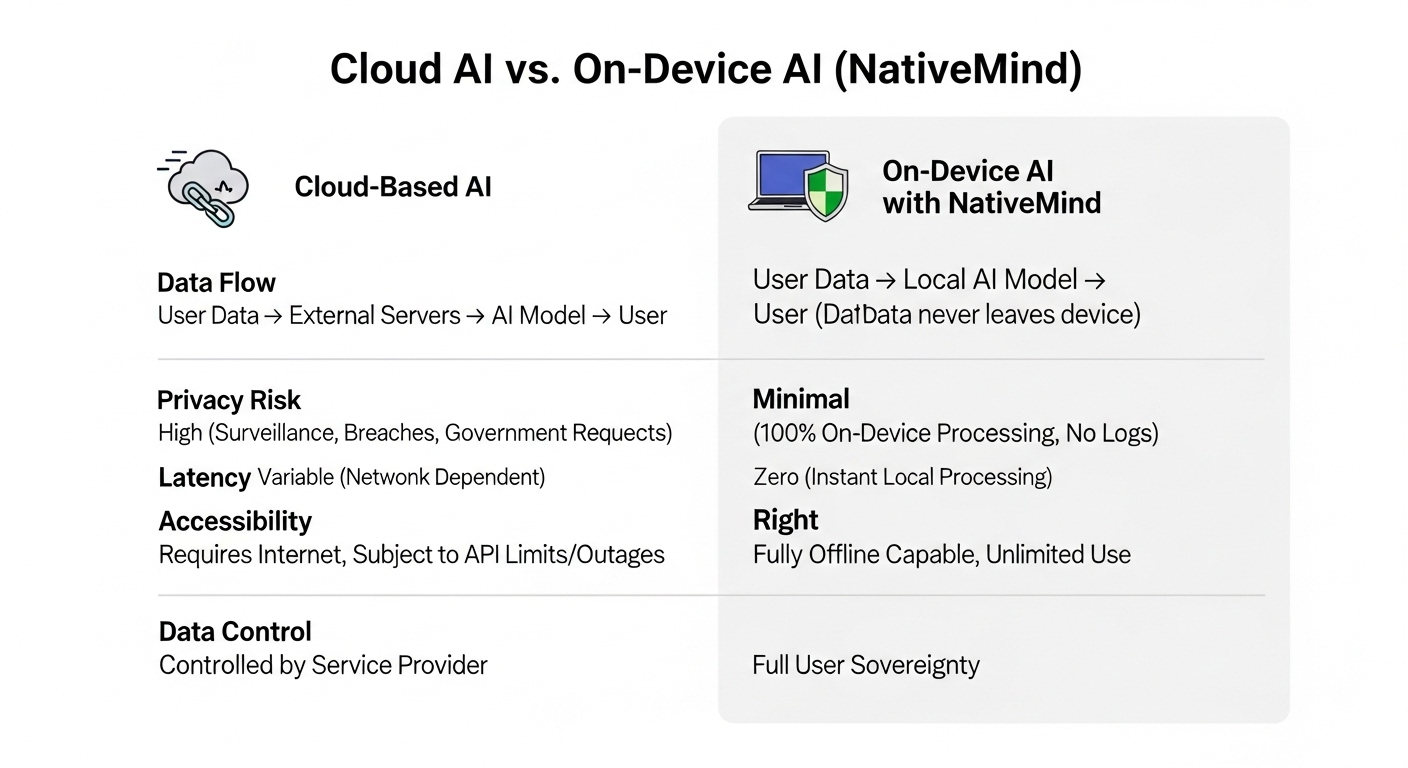

The proliferation of cloud-based AI services presents a fundamental conflict for enterprises and professionals handling sensitive information. Reliance on these external platforms creates inherent data sovereignty and privacy vulnerabilities. The core risk, as highlighted in our sources, is that proprietary or confidential data sent to the cloud can be monitored, repurposed for model training, or inadvertently exposed through security breaches. This trade-off between AI-driven productivity and data security is a critical challenge.

Addressing this conflict directly, a new class of on-device AI tools is emerging. NativeMind exemplifies this approach, engineered to operate with zero cloud dependencies. By processing all data locally on the user's machine, it eliminates the transmission of sensitive information to third-party servers, thereby mitigating the associated privacy and security risks. This article provides a technical analysis of NativeMind, evaluating its practical applications for professional workflows and its performance capabilities in a completely offline environment.

Beyond the Hype: The On-Device Performance Revolution

The long-held assumption that on-device AI necessitates a performance trade-off is now obsolete. A new generation of compact, highly optimized models demonstrates that local processing can match and even exceed the capabilities of larger, cloud-based systems. This marks a significant paradigm shift, where elite performance and data privacy are no longer mutually exclusive.

Verifiable benchmarks from our sources confirm this breakthrough. The compact Qwen3-4B model, for example, now outperforms the 18x larger, cloud-hosted Qwen2.5-72B. Similarly, the Gemma3-4B model achieves performance parity with the 6.75x larger Gemma2-27B. In targeted evaluations, Microsoft's local-first Phi-4 model has even surpassed the formidable Gemini Pro 1.5 in specific reasoning tasks.

For the professional user, the practical benefits are immediate and substantial. By operating entirely on the user's machine, these models eliminate network latency, providing instantaneous responses for a fluid workflow. This local-first architecture also circumvents the typical constraints of cloud services, such as API rate limits, unpredictable costs, and the risk of service outages. The result is a powerful, reliable, and completely private AI tool that operates on the user's terms. This evolution proves that robust performance and uncompromising data sovereignty can finally coexist.

Practical Use Cases for the Privacy-Conscious Professional

The practical applications for professionals handling confidential data are transformative. A financial analyst, for instance, can leverage NativeMind's 'Smart Content Analysis' to instantly summarize proprietary market reports. Because all processing occurs locally, sensitive financial data and internal forecasts are never exposed to external cloud servers, completely mitigating the risk of data leaks or unauthorized monitoring.

Similarly, a researcher can employ 'Multi-tab context awareness' to chat with and synthesize information from multiple confidential research papers at once. This powerful on-device capability allows them to cross-reference findings and build upon protected intellectual property without ever transmitting it, ensuring their novel work remains secure.

For a journalist, the 'Writing Enhancement' feature is critical for drafting and proofreading sensitive stories. By guaranteeing that content involving confidential sources never leaves their local machine, NativeMind provides an essential layer of security. This offline-only operation protects source anonymity and the integrity of an investigation, a non-negotiable requirement for modern investigative reporting.

Enterprise Implementation: Building a Secure, Local AI Ecosystem

NativeMind's 'Enterprise Ready' claim extends beyond individual use, offering a robust framework for custom corporate and developer applications. Its open-source stack - built on Vue 3, WXT, and featuring native Ollama integration - provides a secure, transparent foundation for organizations to build their own private AI tools. This allows enterprises to retain full control over their AI infrastructure, models, and, most importantly, their data.

For specialized sectors, this capability is a strategic advantage. A legal firm, for example, can deploy NativeMind in conjunction with a self-hosted Ollama server running a fine-tuned model trained on legal precedents. This configuration allows lawyers to analyze confidential case documents and sensitive client communications directly within their workflow. By keeping all data processing firewalled within the firm's own network, it guarantees 100% compliance with attorney-client privilege and stringent data protection regulations, eliminating the risks associated with cloud-based legal tech platforms.

Similarly, an R&D department can leverage the open-source code to create a bespoke browser assistant for its engineering teams. This custom tool can be tailored to search and synthesize information exclusively from internal technical documentation, private code repositories, and proprietary research databases. Developers gain a powerful assistant that understands their unique environment without ever exposing trade secrets or intellectual property to an external service. This implementation achieves 'True Data Sovereignty', creating a secure innovation ecosystem shielded from industrial espionage and accidental data leaks. By enabling the development of purpose-built, entirely private AI solutions, NativeMind empowers organizations to harness the power of AI without compromising their most valuable assets.

Getting Started: Implementation and Model Flexibility

For immediate evaluation, NativeMind offers a zero-setup WebLLM pathway. This browser-based implementation uses WebAssembly to run the compact Qwen3-0.6B model directly on your machine, requiring no installation or configuration. It provides a frictionless method to test core functionality, such as content analysis and writing enhancement, and experience on-device AI instantly. This option is ideal for a quick trial, demonstrating the tool's core value proposition without any system overhead.

For a fully private and powerful AI assistant, the recommended pathway is integration with Ollama. This approach leverages your full system resources (CPU/GPU) for maximum performance and enables true self-hosting for complete control over your data and models. Installation unlocks the flexibility to download, run, and seamlessly switch between a wide array of advanced, open-source models. NativeMind supports leading models including Deepseek, Qwen, Llama, Gemma, Mistral, and Phi, allowing you to select the best tool for any task. This method transforms the browser extension into a robust, versatile assistant, allowing power users and developers to tailor the AI to specific workflows. By self-hosting with Ollama, you create a completely firewalled, high-performance AI ecosystem, achieving true data sovereignty and unlocking the full potential of local AI.

Conclusion: The Future is Local and Sovereign

The emergence of on-device solutions like NativeMind signals a critical shift toward a decentralized, private AI paradigm. This evolution decisively resolves the conflict between advanced AI capabilities and data sovereignty. As demonstrated, local processing now delivers state-of-the-art performance, eliminating cloud-related vulnerabilities without compromise. Professionals and enterprises can leverage powerful AI for sensitive tasks, confident that proprietary data remains securely on-premise. This guarantees complete control over intellectual property and confidential information.

For any organization where data security is non-negotiable, the conclusion is clear. Adopting a private, on-device AI framework is not merely an option; it is a strategic necessity for securing a competitive and operational advantage.