The Paradigm Shift: From IDEs to the Agentic Development Environment

"We are in the middle of a major platform shift, from manually writing and editing code to prompting an AI to do the work for us," states Warp CEO Zach Lloyd. This evolution from manual coding to prompt-driven development demands a new class of tooling. The future isn't about retrofitting old environments; it's about building natively for AI. This new paradigm requires an Agentic Development Environment (ADE)—a platform where AI is not an add-on, but the core operational primitive.

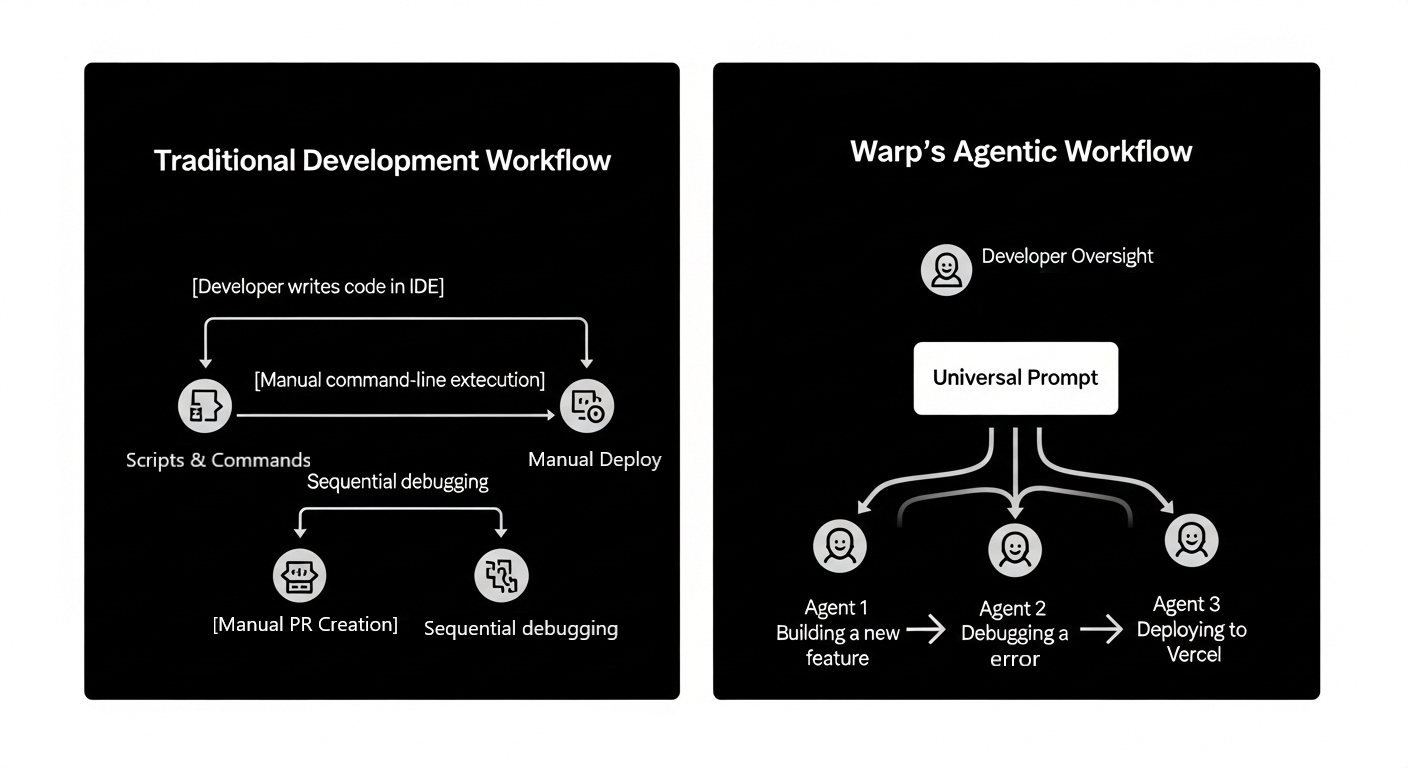

Current solutions fall short. Traditional IDEs treat AI as an afterthought, "bolting on" chat panels that exist separately from the primary coding workflow. This creates a disjointed experience, forcing developers to context-switch between writing code and conversing with a bot. Similarly, CLI-based agents, while powerful, are "buried" within the terminal. They lack a dedicated user interface, making complex, multi-step tasks and human-agent collaboration cumbersome and opaque. These tools were not designed for the interactive, iterative nature of agentic workflows.

Warp presents Warp 2.0 as the first purpose-built ADE, designed from the ground up to address these shortcomings. It is not a terminal with AI features bolted on; it is an agentic environment that uses a terminal-inspired interface. Warp 2.0 is built around prompting as a primary input, running multiple agent tasks concurrently, and letting developers step in to review and steer an agent's work. It aims to provide the native foundation developers need to build, test, and debug in an AI-first world.

Core Architecture: How Warp Delivers on Agentic Promises

Warp's architecture rests on four integrated pillars: Code, for navigating and editing files across multiple repositories; Agents, for managing concurrent AI tasks; Terminal, a high-performance shell interface; and Drive, a shared space where teams store and reuse workflows. A single universal input ties these components together: it accepts both natural-language prompts and ordinary shell commands and routes each one appropriately, which makes AI a native function rather than an add-on.
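
To make the idea of a universal input concrete, here is a minimal sketch of how an input box might decide whether a line is a shell command or a natural-language prompt. It is a naive heuristic under stated assumptions, not Warp's detection logic: `classify_input` and `KNOWN_COMMANDS` are hypothetical names, and a real tool could also consult `$PATH`, shell history, or a language model.

```python
import shlex
from typing import FrozenSet, Literal

# Hypothetical set of executables; a real tool could consult $PATH instead.
KNOWN_COMMANDS: FrozenSet[str] = frozenset(
    {"git", "ls", "cd", "cat", "npm", "docker", "kubectl", "python", "cargo"}
)


def classify_input(
    text: str, known_commands: FrozenSet[str] = KNOWN_COMMANDS
) -> Literal["command", "prompt"]:
    """Return "command" if the input looks like a shell command, else "prompt"."""
    stripped = text.strip()
    # A trailing question mark is a strong hint that the user is asking, not executing.
    if stripped.endswith("?"):
        return "prompt"
    try:
        tokens = shlex.split(stripped)
    except ValueError:
        # Unbalanced quotes (e.g. the apostrophe in "don't") usually mean prose.
        return "prompt"
    if not tokens:
        # Empty input: treat it like pressing Enter at a shell prompt.
        return "command"
    first = tokens[0]
    # Known executables and explicit paths (./build.sh, /usr/bin/env) are commands.
    if first in known_commands or first.startswith(("./", "../", "/", "~/")):
        return "command"
    return "prompt"
```

With this heuristic, `git status` and `./build.sh --release` classify as commands, while `fix the failing test in auth.ts` and `what's failing in CI?` classify as prompts. Its obvious weakness is a prompt that happens to start with a command name, such as `git is confusing, explain rebase`, which is one reason a production input needs more than a first-token check.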

Warp reports state-of-the-art results for this design: it ranks #1 on Terminal-Bench, completing 52% of tasks, and places in the top five on SWE-bench Verified with a 71% pass rate. These results point to a strong ability to understand and carry out complex software engineering instructions.

The practical benefits show up in day-to-day work. Because the components are integrated, a developer can work across multiple repositories in a single interface. When an agent generates code, the change appears as an interactive diff in Warp's native editor, where the developer can review it, edit it, and apply it in place. This removes the back-and-forth between a CLI agent and a separate IDE and keeps the whole loop, from initial prompt to final commit, in one environment.
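
The review-then-apply loop can be sketched with Python's standard `difflib`. This is an illustration under assumptions, not Warp's editor: `ProposedEdit`, `review`, and the `decide` callback are hypothetical. The callback stands in for the developer, who sees the unified diff and returns the text to write (possibly after editing it) or `None` to reject the change.

```python
import difflib
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ProposedEdit:
    """A hypothetical agent-proposed change to a single file."""

    path: str
    original: str
    proposed: str

    def diff(self) -> str:
        # Render the change as a unified diff, the format reviewers know from git.
        return "".join(
            difflib.unified_diff(
                self.original.splitlines(keepends=True),
                self.proposed.splitlines(keepends=True),
                fromfile=f"a/{self.path}",
                tofile=f"b/{self.path}",
            )
        )


# decide(diff, proposed_text) returns the text to write, or None to reject.
Decider = Callable[[str, str], Optional[str]]


def review(edit: ProposedEdit, decide: Decider) -> str:
    """Return the file contents after a human reviews an agent's edit."""
    result = decide(edit.diff(), edit.proposed)
    # Rejecting leaves the file untouched; accepting may include in-place tweaks.
    return edit.original if result is None else result
```

Passing `lambda diff, text: text` accepts the edit as proposed, `lambda diff, text: None` rejects it, and a callback that modifies `text` models a developer adjusting the agent's code before applying it.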

Advanced Use Cases for Engineering Teams

The impact of this agentic approach is clearest in complex, multi-step workflows across the development stack. For frontend work, a developer can attach a Figma mockup as image context. The agent analyzes the visual design (layout, color palette, and component structure) and generates a new, working Next.js component to match it. The code appears as an interactive diff in the editor, so the developer can review, refine, and apply the changes without leaving the environment, turning a design concept into working code in minutes.

Backend and DevOps workflows are just as streamlined. An engineer can issue a high-level prompt such as “Address my Linear issue.” Through a Linear MCP server, the agent fetches the ticket and parses the requirements from its description. It then searches the codebase for the relevant files, implements the bug fix or feature, and can draft a corresponding test case. What would otherwise be hours of manual context-gathering and coding becomes a single command that the engineer supervises.
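
The step where the agent identifies the relevant files can be approximated, very crudely, by keyword overlap between the ticket text and each file. This is not a description of how Warp's agent works; a production agent would more plausibly combine code search with model reasoning. The sketch below is a hypothetical baseline, and `rank_files`, the stopword list, and the sample ticket are illustrative.

```python
import re
from typing import Dict, List, Set

# Hypothetical stopword list: words this common say nothing about file location.
STOPWORDS: Set[str] = {"the", "and", "when", "should", "with", "for", "this", "that", "from"}


def keywords(text: str) -> Set[str]:
    """Lowercased identifiers and words of three or more characters."""
    return {w.lower() for w in re.findall(r"[A-Za-z_][A-Za-z0-9_]{2,}", text)}


def rank_files(ticket: str, files: Dict[str, str], top_k: int = 3) -> List[str]:
    """Rank files by how many ticket keywords appear in their path or contents."""
    terms = keywords(ticket) - STOPWORDS
    scores = {path: len(terms & keywords(f"{path} {body}")) for path, body in files.items()}
    # Highest overlap first; ties broken alphabetically so output is deterministic.
    ranked = sorted((p for p, s in scores.items() if s > 0), key=lambda p: (-scores[p], p))
    return ranked[:top_k]
```

For a ticket reading "Login fails when the token is expired; validateToken should refresh it", a file such as `src/auth/login.ts` that calls `validateToken` ranks first, while unrelated files score zero and are dropped.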

The same approach works across the full stack. In a single, continuous conversation, a developer can implement a feature that spans a client repository and a server repository, for instance by asking an agent to add a new API endpoint on the backend and build the frontend UI that consumes it. The agent coordinates the entire change, keeping dependencies and context consistent across both codebases within one interface.

Warp reports substantial productivity gains. Developers who run multiple agents in parallel on tasks like these report saving 6–7 hours per week, and early testers have used Warp to generate more than 75 million lines of code with a 95% acceptance rate. Warp cites these figures as evidence of the platform's impact on real-world engineering velocity.

Context and Control: The Foundation of Trustworthy AI

Warp gives its agents layered context that extends well beyond the local codebase. Central to this is the Model Context Protocol (MCP), an open protocol for connecting agents to external tools and data sources. Through MCP servers, agents can pull requirements directly from a Linear ticket, analyze a Figma mockup, or reference a GitHub pull request. Developers can add further context by uploading architecture diagrams as images, giving agents a high-level view of the system design. For institutional knowledge, Warp Drive lets teams share rules, documentation, and preferred workflows so that agents follow established engineering standards and best practices. This broad context is what allows agents to generate accurate, relevant, and useful code.
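
Under the hood, MCP is built on JSON-RPC 2.0: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`, and over the stdio transport each message is a single line of JSON. The sketch below builds such a request and extracts the text from a response. The tool name `get_issue` and its `id` argument are hypothetical; the tools a real Linear, Figma, or GitHub MCP server exposes should be read from its `tools/list` output.

```python
import itertools
import json
from typing import Any, Dict, Optional

# JSON-RPC request ids must be unique per session; a counter is the simplest source.
_request_ids = itertools.count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as one line (stdio framing is newline-delimited)."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message, separators=(",", ":"))


def tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Build an MCP tools/call request for the named tool."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


def extract_text(response_line: str) -> str:
    """Return the text content of a tools/call response, raising on errors."""
    message = json.loads(response_line)
    if "error" in message:
        # Protocol-level failure, e.g. an unknown tool or malformed params.
        raise RuntimeError(message["error"].get("message", "unknown JSON-RPC error"))
    result = message["result"]
    texts = [b["text"] for b in result.get("content", []) if b.get("type") == "text"]
    if result.get("isError"):
        # Tool-level failure: the server ran the tool, but the tool reported an error.
        raise RuntimeError("\n".join(texts) or "tool reported an error")
    return "\n".join(texts)
```

Separating protocol errors (the `error` member) from tool errors (`isError` in the result) matters in practice: the first usually means the agent called the server incorrectly, while the second is information, such as a missing ticket, that the agent can reason about.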

Warp treats security and privacy as core design principles. According to Warp, external LLM providers do not train their models on user data or prompts. To limit risk, agent autonomy is configurable: teams can set precise boundaries on what an agent may do, from read-only analysis to file modification. A one-click toggle disables all telemetry. For organizations with stringent compliance needs, enterprise plans add advanced security controls. Zero Data Retention (ZDR) ensures that prompts and code are never stored on Warp's servers, and Bring Your Own LLM (BYO LLM) lets companies route all AI requests through their own private, self-hosted language models, keeping the development environment isolated and under their control.
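
Configurable autonomy can be pictured as a policy that every agent action passes through before it runs. The sketch below is hypothetical and does not reflect Warp's actual settings: the three `Autonomy` levels, the `"allow"`/`"ask"`/`"deny"` outcomes, and the command denylist are illustrative assumptions. Actions above the configured level are escalated to a human rather than silently blocked, and denylisted commands are refused outright.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import FrozenSet, Optional


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, ordered from least to most permissive."""

    READ_ONLY = 0
    EDIT_FILES = 1
    RUN_COMMANDS = 2


# Minimum autonomy each kind of action requires.
REQUIRED_LEVEL = {
    "read": Autonomy.READ_ONLY,
    "write": Autonomy.EDIT_FILES,
    "run": Autonomy.RUN_COMMANDS,
}


@dataclass(frozen=True)
class AgentPolicy:
    autonomy: Autonomy
    command_denylist: FrozenSet[str] = frozenset()

    def check(self, action: str, command: Optional[str] = None) -> str:
        """Return "allow", "ask" (needs human approval), or "deny"."""
        if action not in REQUIRED_LEVEL:
            raise ValueError(f"unknown action: {action!r}")
        parts = command.split() if command else []
        # Denylisted programs are refused regardless of the autonomy level.
        if action == "run" and parts and parts[0] in self.command_denylist:
            return "deny"
        return "allow" if self.autonomy >= REQUIRED_LEVEL[action] else "ask"
```

Letting the denylist take precedence over the autonomy level means that raising an agent's autonomy never unlocks a command the team has explicitly ruled out.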